Research Report

AI-Based Music to Dance Synthesis and Rendering

2024 S.T. Yau High School Science Award

Name of team member: JIN Bohan

School: Bancroft School

City, Country: Worcester, USA

Name of supervising teacher: Yuxuan Zhou

AI Choreographer is a deep learning model that generates dance motions from music. However, several weaknesses still make it difficult to use in practice. For example, although its motions are realistic, they are sometimes repetitive or fail to respond correctly to the audio. In addition, the model lacks a usable renderer that can directly animate user-provided character models with the generated data. We improved the base model to generate more realistic dance motions, and we built an automated rendering pipeline that turns the generated motions into animations of human models provided by the user. We improved generation quality by feeding more audio features into the model, giving it richer information to learn from. We also overcame several difficulties in the rendering process, including applying the AI-generated NumPy motion data to SMPL models and converting the animated SMPL models into usable FBX models. Overall, the improved model generates motions that are more diverse and realistic than those of the base model, yielding higher-quality dance.

INTRODUCTION

- Dance is a universal art form, but hard to master without training.

- AI can treat dance as a language and generate it, much as it processes text or images.

- AI Choreographer can generate dance from music but lacks usability and realism.

- Our improvements include more detailed audio analysis and an automated rendering pipeline.

- Final output: a fully animated, ready-to-use FBX model from just a music input.

RELATED WORKS

- 3D dance generation has been explored using CNNs and Transformers.

- AI Choreographer (FACT, Full-Attention Cross-modal Transformer) uses the AIST++ dataset and a Transformer-based pipeline.

- EDGE is another model that uses diffusion and editable parameters for realism.

- Transformer models are powerful in capturing sequence data like language and motion.

- Audio features like MFCC and Chroma help models learn rhythm and melody patterns.

BACKGROUND

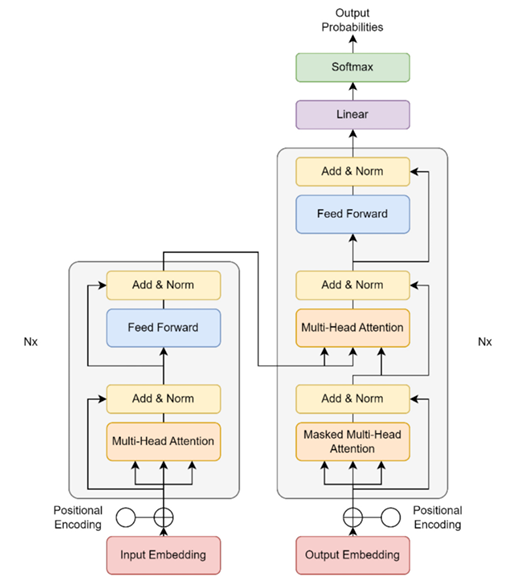

Transformer Model

- Based on the attention mechanism, which uses Query, Key, and Value vectors.

- Multi-head attention allows the model to learn relationships from different angles.

- An encoder-decoder structure maps audio to a dance sequence through stacked layers.
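To make the Query/Key/Value description concrete, here is a minimal NumPy sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, and its multi-head variant as defined by Vaswani et al. (2017). It is a generic illustration, not the FACT implementation: the function names are hypothetical, the weight matrices are placeholders supplied by the caller, and masking, biases, and layer normalization are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """q: (..., T_q, d_k), k: (..., T_k, d_k), v: (..., T_k, d_v)."""
    d_k = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)  # (..., T_q, T_k)
    weights = softmax(scores, axis=-1)                  # each row sums to 1
    return weights @ v, weights

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Self-attention over a sequence x: (T, d_model); each w_*: (d_model, d_model)."""
    t, d_model = x.shape
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads

    def split_heads(h):
        # (T, d_model) -> (num_heads, T, d_head): each head works in its own subspace.
        return h.reshape(t, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    heads, weights = scaled_dot_product_attention(q, k, v)
    concat = heads.transpose(1, 0, 2).reshape(t, d_model)  # concatenate the heads
    return concat @ w_o, weights
```

Because each head attends within its own d_model / num_heads subspace, the heads can learn different relationships between frames, which is what "learning from different angles" refers to.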

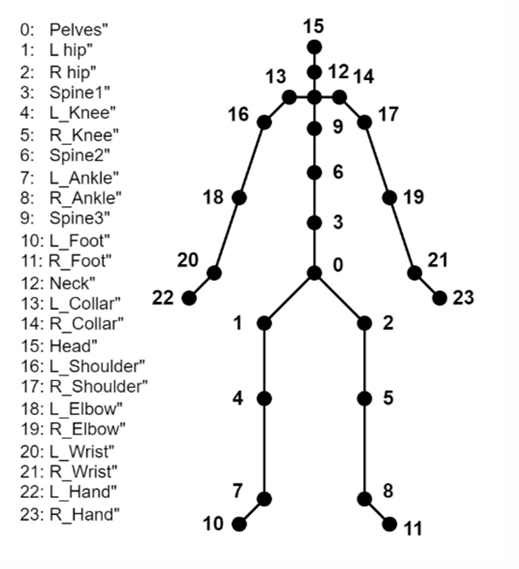

SMPL Model

- Represents the 3D human body using joint rotations and controllable body shape.

- Supports multiple body types using shape parameters (β).

- Calculates joint transformations and skinning to animate the mesh in Blender.
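SMPL poses a mesh in three steps: it converts each joint's axis-angle rotation into a rotation matrix, chains those rotations down the kinematic tree (forward kinematics), and blends the resulting joint transforms per vertex (linear blend skinning). The NumPy sketch below illustrates those steps on a hypothetical two-joint chain. It is not the SMPL code: it omits SMPL's shape (β) and pose-corrective blend shapes, its learned skinning weights, and its joint regressor, and it assumes every parent index is smaller than its child's, as in SMPL's 24-joint ordering.

```python
import numpy as np

def rodrigues(axis_angle):
    """(J, 3) axis-angle vectors -> (J, 3, 3) rotation matrices."""
    theta = np.linalg.norm(axis_angle, axis=-1, keepdims=True)  # (J, 1) rotation angles
    k = axis_angle / np.maximum(theta, 1e-8)                     # unit axes (zero if no rotation)
    kx, ky, kz = k[:, 0], k[:, 1], k[:, 2]
    zero = np.zeros_like(kx)
    # Skew-symmetric cross-product matrix of each axis.
    K = np.stack([zero, -kz, ky, kz, zero, -kx, -ky, kx, zero], axis=-1).reshape(-1, 3, 3)
    theta = theta[..., None]                                      # (J, 1, 1)
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def forward_kinematics(rotations, rest_joints, parents):
    """Global 4x4 transform of every joint; parents[j] < j and the root has parent -1."""
    G = np.zeros((len(parents), 4, 4))
    for j, p in enumerate(parents):
        local = np.eye(4)
        local[:3, :3] = rotations[j]
        # Translate by the bone offset from the parent joint in the rest pose.
        local[:3, 3] = rest_joints[j] - (rest_joints[p] if p >= 0 else 0.0)
        G[j] = local if p < 0 else G[p] @ local
    return G

def linear_blend_skinning(vertices, skin_weights, G, rest_joints):
    """vertices: (V, 3) rest mesh; skin_weights: (V, J) with rows summing to 1."""
    # Make each transform relative to the rest pose: A_j = G_j @ translate(-rest_joint_j).
    A = G.copy()
    A[:, :3, 3] -= np.einsum("jab,jb->ja", G[:, :3, :3], rest_joints)
    T = np.einsum("vj,jab->vab", skin_weights, A)                 # per-vertex blended transform
    v_h = np.concatenate([vertices, np.ones((len(vertices), 1))], axis=1)
    return np.einsum("vab,vb->va", T, v_h)[:, :3]
```

For example, rotating the second joint of a chain with rest joints at (0, 0, 0) and (1, 0, 0) by 90 degrees about z moves a vertex at (2, 0, 0), fully weighted to that joint, to (1, 1, 0), while a vertex weighted to the root stays in place.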

Music Features

- Two main categories: Spectral features (e.g., MFCC, Chroma) and Rhythmic features (e.g., tempo, beats)

- These features are used to guide motion generation more accurately
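Rhythmic features such as tempo are usually derived from an onset-strength envelope. As a rough illustration, the NumPy sketch below computes a spectral-flux envelope and picks the tempo whose beat period maximizes the envelope's autocorrelation. It is a simplified stand-in, not librosa's beat tracker; the function names, window sizes, and the 80 to 180 BPM search range are assumptions.

```python
import numpy as np

def onset_envelope(signal, sr, hop=512, n_fft=1024):
    """Positive spectral flux per frame (a simple onset-strength envelope)."""
    n_frames = 1 + (len(signal) - n_fft) // hop
    window = np.hanning(n_fft)
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))
    # Sum only increases in magnitude between consecutive frames (new energy suggests an onset).
    flux = np.maximum(mag[1:] - mag[:-1], 0.0).sum(axis=1)
    return np.concatenate([[0.0], flux])

def estimate_tempo(envelope, sr, hop=512, bpm_range=(80.0, 180.0)):
    """Tempo (BPM) whose beat period maximizes the envelope autocorrelation."""
    env = envelope - envelope.mean()
    ac = np.correlate(env, env, mode="full")[len(env) - 1:]     # lags 0 .. N-1
    frame_rate = sr / hop                                        # envelope frames per second
    min_lag = int(np.floor(60.0 * frame_rate / bpm_range[1]))    # fastest tempo -> shortest lag
    max_lag = int(np.ceil(60.0 * frame_rate / bpm_range[0]))     # slowest tempo -> longest lag
    lag = min_lag + int(np.argmax(ac[min_lag:max_lag + 1]))
    return 60.0 * frame_rate / lag
```

Restricting the lag range matters: a beat pattern also correlates with itself at two or three beat periods, so a search range that is too wide can return half or a third of the true tempo.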

Visual model of the Transformer architecture.

Joints used by the converter to incorporate the generated motions into FBX models. Each joint and its index are listed on the left, and the position of each joint is shown on the right.

METHODS

Music Feature Extraction

- Used librosa to extract MFCC, Chroma, envelope, tempo, spectral centroid, and more.

- Computing MFCCs involves an FFT, Mel filtering, log scaling, and a DCT.

- Rhythmic elements such as beat and tempo help align motion with the flow of the music.
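The MFCC steps listed above (FFT, Mel filter bank, log scaling, DCT) can be sketched from scratch in NumPy. In practice librosa wraps this in `librosa.feature.mfcc`, whose defaults (Slaney-style Mel filters and decibel scaling) differ from the HTK-style Mel formula and natural log used here, so the values will not match librosa's output. The function names and parameter defaults below are illustrative assumptions.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)   # HTK-style Mel scale

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """(n_mels, n_fft // 2 + 1) triangular filters evenly spaced on the Mel scale."""
    fft_freqs = np.linspace(0.0, sr / 2, n_fft // 2 + 1)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2))
    fb = np.zeros((n_mels, len(fft_freqs)))
    for m in range(n_mels):
        left, center, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def dct_ii(x, n_out):
    """Orthonormal DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    i = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * i + 1) / (2 * n))
    scale = np.full(n_out, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)
    return (x @ basis.T) * scale

def mfcc(signal, sr, n_fft=2048, hop=512, n_mels=40, n_mfcc=20):
    """Returns an array of shape (frames, n_mfcc)."""
    n_frames = 1 + (len(signal) - n_fft) // hop
    window = np.hanning(n_fft)
    frames = np.stack([signal[t * hop:t * hop + n_fft] * window for t in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2           # 1. FFT -> power spectrum
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T           # 2. Mel filter bank
    log_mel = np.log(mel + 1e-10)                               # 3. log scaling
    return dct_ii(log_mel, n_mfcc)                              # 4. DCT -> cepstral coefficients
```

The DCT decorrelates the log-Mel energies, so the first few coefficients summarize the overall spectral envelope (timbre) of each frame compactly.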

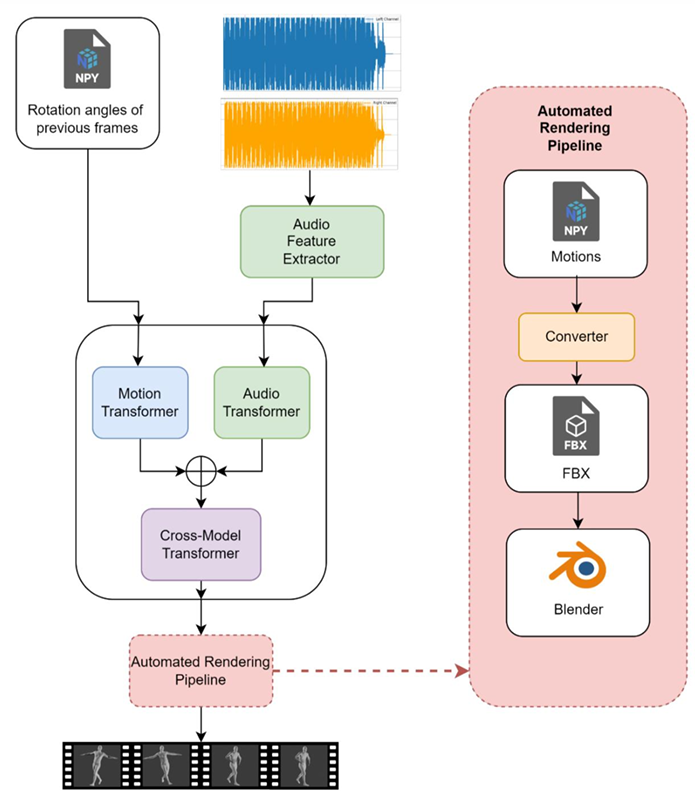

Dance Pose Generation

- Combines audio and motion transformers to generate next-frame poses.

- Takes the extracted audio features and the current pose sequence as input and predicts future motion, saved in NumPy format.

- Outputs joint rotation angles for use in animation rendering.
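The generation step can be pictured as an autoregressive loop: the model sees a window of recent poses plus the matching audio features, predicts the next pose, and appends it to the sequence. The sketch below assumes a hypothetical `model` callable and audio features that are frame-aligned with the motion; the 120-frame motion window and 240-frame audio window are illustrative defaults, not values confirmed by this report. Poses are treated as flat vectors, for example 72 values for 24 SMPL joints in axis-angle form.

```python
import numpy as np

def generate_dance(model, audio_features, seed_motion, motion_window=120, audio_window=240):
    """Autoregressively extend seed_motion until it covers every audio frame.

    model:          hypothetical callable (motion_ctx, audio_ctx) -> next pose of shape (pose_dim,)
    audio_features: (T, feat_dim) array, assumed frame-aligned with the motion
    seed_motion:    (T0, pose_dim) array of initial poses, T0 < T
    """
    motion = [np.asarray(pose, dtype=np.float32) for pose in seed_motion]
    for t in range(len(motion), len(audio_features)):
        start = max(0, t - motion_window)
        motion_ctx = np.stack(motion[start:t])                  # most recent poses
        audio_ctx = audio_features[start:start + audio_window]  # music aligned with, and ahead of, them
        next_pose = np.asarray(model(motion_ctx, audio_ctx), dtype=np.float32)
        motion.append(next_pose)                                 # feed the prediction back in
    return np.stack(motion)                                      # (T, pose_dim)
```

The resulting array can be written with `np.save("dance.npy", motion)` and handed to the rendering stage. Because each prediction becomes input for the next step, errors can accumulate over long sequences, which is one reason generated dances may drift or become repetitive.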

3D Animation Rendering

- Developed a custom Blender plugin to apply NumPy pose data to SMPL models.

- Automatically converts the animated SMPL model into an FBX file with full animation data.

- Outputs are compatible with Blender, Unity, Unreal, and other 3D tools.
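Before a NumPy pose array can drive a rig in Blender, each joint's axis-angle rotation usually has to be converted into the (w, x, y, z) quaternion form used by Blender pose bones. The sketch below performs that conversion for an array of shape (frames, 72), assuming 24 SMPL joints with 3 axis-angle values each; the function names are hypothetical, and this is one possible approach rather than necessarily the one the plugin uses. Inside Blender, the results could then be assigned to `pose_bone.rotation_quaternion` (with `rotation_mode` set to `'QUATERNION'`), keyed with `pose_bone.keyframe_insert("rotation_quaternion", frame=f)`, and exported with `bpy.ops.export_scene.fbx`. A real converter must also account for differences between SMPL joint frames and the bones' rest orientations.

```python
import numpy as np

def axis_angle_to_quaternion(aa):
    """(..., 3) axis-angle vectors -> (..., 4) unit quaternions in (w, x, y, z) order."""
    aa = np.asarray(aa, dtype=np.float64)
    angle = np.linalg.norm(aa, axis=-1, keepdims=True)
    half = 0.5 * angle
    # sin(angle / 2) / angle tends to 0.5 as angle -> 0; use that limit to avoid dividing by zero.
    small = angle < 1e-8
    scale = np.where(small, 0.5, np.sin(half) / np.where(small, 1.0, angle))
    return np.concatenate([np.cos(half), aa * scale], axis=-1)

def poses_to_quaternions(poses, n_joints=24):
    """(frames, n_joints * 3) pose array -> (frames, n_joints, 4) quaternions."""
    poses = np.asarray(poses, dtype=np.float64)
    if poses.ndim != 2 or poses.shape[1] != n_joints * 3:
        raise ValueError(f"expected shape (frames, {n_joints * 3}), got {poses.shape}")
    return axis_angle_to_quaternion(poses.reshape(len(poses), n_joints, 3))
```

Quaternions are a convenient target because they interpolate smoothly between keyframes and avoid the gimbal-lock problems of Euler angles.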

The main processes of dance pose generation. Rotation angles and extracted audio features are fed into the model to generate new dance motions. The generated motion, stored as an NPY file, is then passed to the automated rendering pipeline, which applies it to an FBX model and renders it inside Blender.

RESULTS

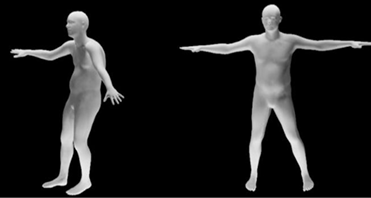

Motion comparison between the base model (right) and our improved model (left) on the same frame

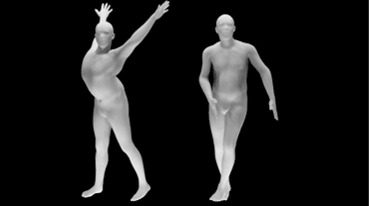

Motion comparison between the base model (right) and our improved model (left) on the same frame

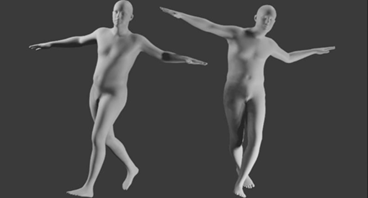

Motion comparison between the base model (left) and our improved model (right) on the same frame

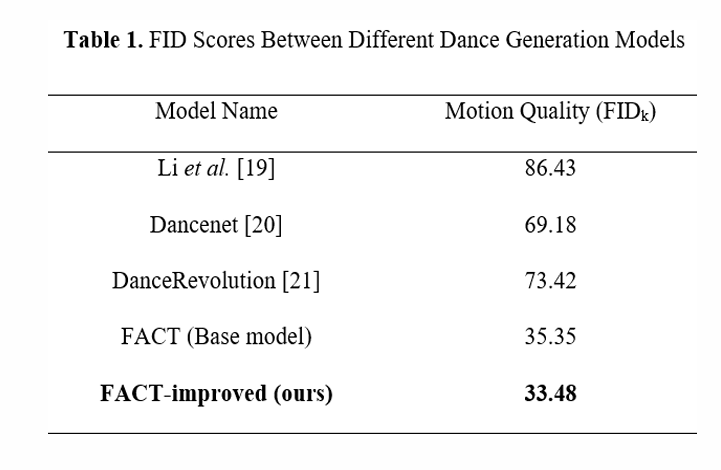

Note: The realism and quality of the generated motion can be measured by FID scores; lower FID scores indicate more realistic motion. The scores for the base model and the other models are taken from the base model's paper.
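FID compares the statistics of real and generated motion features by fitting a Gaussian to each set and computing the Fréchet distance ||mu_r - mu_g||^2 + Tr(S_r + S_g - 2 (S_r S_g)^(1/2)), where mu is the mean and S the covariance of each feature set. The NumPy sketch below implements that formula. The feature extractor is left as an input, since the base model's paper computes FID on its own kinetic and geometric motion features; the function names are hypothetical.

```python
import numpy as np

def _sqrtm_psd(m):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(m)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T

def frechet_distance(feats_real, feats_gen):
    """Fréchet distance between Gaussians fitted to two (N, D) feature sets."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # Tr((cov_r cov_g)^(1/2)) equals Tr((S cov_g S)^(1/2)) with S = cov_r^(1/2);
    # the latter matrix is symmetric PSD, so eigvalsh can be used safely.
    s = _sqrtm_psd(cov_r)
    middle = s @ cov_g @ s
    middle = 0.5 * (middle + middle.T)  # remove tiny asymmetry from rounding
    tr_covmean = np.sqrt(np.clip(np.linalg.eigvalsh(middle), 0.0, None)).sum()
    return float(np.sum((mu_r - mu_g) ** 2) + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_covmean)
```

Identical feature sets give a distance of zero, and shifting every generated feature by a constant vector adds exactly the squared length of that shift, which is why lower scores mean the generated motion statistically resembles real dance more closely.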

OBJECTIVES

- Improve AI-generated 3D dance motions in realism and diversity

- Enhance music-motion synchronization by incorporating richer audio features

- Develop a usable rendering pipeline to convert AI motion data into animated 3D models

- Make the system accessible for creators in dance, games, and animation

CONCLUSIONS

- Our enhanced AI Choreographer produces more diverse and realistic dance motions.

- Richer audio input improves beat-following and pose variety.

- The rendering pipeline allows direct output into standard 3D formats, making the tool practical.

- This work makes AI-generated dance more accessible for creative, educational, and industrial use.

FUTURE WORKS

- Expand model compatibility with other 3D character formats beyond SMPL

- Integrate more advanced music understanding (e.g., emotion or genre classification)

- Improve real-time generation and rendering capabilities for live applications

- Add a user-friendly interface to allow broader access by non-programmers

- Explore transfer to physical robots or AR/VR environments for embodied dance

REFERENCES

Vaswani, Ashish, et al. “Attention is all you need.” Advances in Neural Information Processing Systems 30 (2017).

Li, Ruilong, et al. “AI Choreographer: Music Conditioned 3D Dance Generation with AIST++.” Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021.

McFee, Brian, et al. “librosa: Audio and Music Signal Analysis in Python.” Proceedings of the 14th Python in Science Conference (SciPy). 2015.

Loper, Matthew, et al. “SMPL: A skinned multi-person linear model.” Seminal Graphics Papers: Pushing the Boundaries, Volume 2. 2023. 851–866.

Li, Jiaman, et al. “Learning to generate diverse dance motions with transformer.” arXiv preprint arXiv:2008.08171, 2020.

Zhuang, Wenlin, et al. “Music2Dance: Music-driven dance generation using WaveNet.” arXiv preprint arXiv:2002.03761, 2020.

Huang, Ruozi, et al. “Dance Revolution: Long-term dance generation with music via curriculum learning.” International Conference on Learning Representations, 2021.